Source: Farnam Street

“Nor public flame, nor private, dares to shine;

Nor human spark is left, nor glimpse divine!

Lo! thy dread empire, Chaos! is restored;

Light dies before thy uncreating word:

Thy hand, great Anarch! lets the curtain fall;

And universal darkness buries all.”

― Alexander Pope, The Dunciad

***

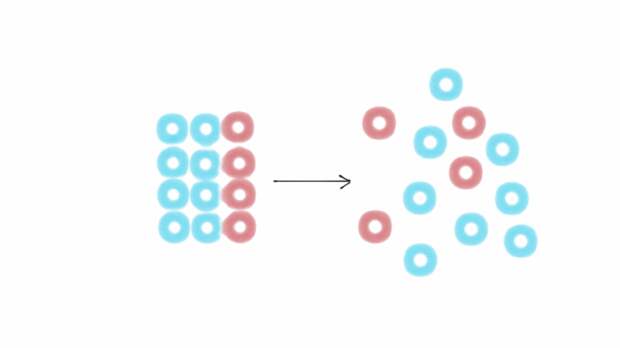

The second law of thermodynamics states that “as one goes forward in time, the net entropy (degree of disorder) of any isolated or closed system will always increase (or at least stay the same).”[1] That is a long way of saying that all things tend towards disorder. This is one of the basic laws of the universe, and it is something we can observe in our lives. Entropy is simply a measure of disorder. You can think of it as nature’s tax[2].

Uncontrolled disorder increases over time. Energy disperses and systems dissolve into chaos. The more disordered something is, the more entropic we consider it. In short, we can define entropy as a measure of the disorder of the universe, on both a macroscopic and a microscopic level. The Greek root of the word translates to “a turning towards transformation,” with that transformation being chaos.

As you read this article, entropy is all around you. Cells within your body are dying and degrading, an employee or coworker is making a mistake, the floor is getting dusty, and the heat from your coffee is spreading out. Zoom out a little, and businesses are failing, crimes and revolutions are occurring, and relationships are ending. Zoom out a lot further, and we see the entire universe marching towards a state of maximum disorder.

Let’s take a look at what entropy is, why it occurs, and whether or not we can prevent it.

The Discovery of Entropy

The identification of entropy is attributed to Rudolf Clausius (1822–1888), a German mathematician and physicist.

I say attributed because it was a young French engineer, Sadi Carnot (1796–1832), who first hit on the idea of thermodynamic efficiency; however, the idea was so foreign to people at the time that it had little impact. Clausius later built on Carnot’s work and developed the ideas further.

Clausius studied the conversion of heat into work. He recognized that heat from a body at a high temperature would flow to one at a lower temperature. This is how your coffee cools down the longer it’s left out: the heat from the coffee flows into the room. This happens naturally. But if you want to heat cold water to make the coffee, you need to do work; you need a power source to heat the water.

From this idea comes Clausius’s statement of the second law of thermodynamics: “heat does not pass from a body at low temperature to one at high temperature without an accompanying change elsewhere.”
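Clausius’s statement can be checked with a little arithmetic using the quantity he would later name entropy. As a minimal sketch, assume two bodies big enough that their temperatures don’t change (ideal reservoirs), and use the textbook relation ΔS = Q/T for heat Q entering or leaving a body at absolute temperature T. The function name and the coffee and room temperatures below are illustrative assumptions, not figures from the article.

```python
def total_entropy_change(q_joules: float, t_source: float, t_sink: float) -> float:
    """Net entropy change (J/K) when heat q leaves a reservoir at t_source
    and enters a reservoir at t_sink. Temperatures are in kelvin and are
    assumed constant (ideal reservoirs)."""
    if t_source <= 0 or t_sink <= 0:
        raise ValueError("absolute temperatures must be positive")
    # The source loses q/t_source of entropy; the sink gains q/t_sink.
    return q_joules / t_sink - q_joules / t_source


# Illustrative numbers: 1000 J moving between hot coffee (~353 K) and a room (~293 K).
forward = total_entropy_change(1000.0, t_source=353.0, t_sink=293.0)
backward = total_entropy_change(1000.0, t_source=293.0, t_sink=353.0)

print(f"hot -> cold: {forward:+.3f} J/K")   # positive: happens on its own
print(f"cold -> hot: {backward:+.3f} J/K")  # negative: needs a change elsewhere
```

Whenever the source is hotter than the sink, the forward result is positive. Running heat “uphill” would lower the total entropy of the two bodies, so something outside them (a power source doing work) has to make up the difference, which is exactly Clausius’s “accompanying change elsewhere.”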

Clausius also observed that heat-powered devices worked in an unexpected manner: Only a percentage of the energy was converted into actual work. Nature was exerting a tax. Perplexed, scientists asked: where did the rest of the heat go, and why?
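How big is nature’s tax? A standard result known as the Carnot limit puts a hard ceiling on it: an engine drawing heat from a hot reservoir at T_hot and dumping waste heat into a cold one at T_cold (both in kelvin) can turn at most 1 - T_cold/T_hot of that heat into work. The sketch below just evaluates that bound; the function names and the 450 K / 300 K temperatures are hypothetical examples.

```python
def carnot_efficiency(t_hot: float, t_cold: float) -> float:
    """Upper bound on the fraction of input heat that any heat engine
    can turn into work, given reservoir temperatures in kelvin."""
    if not (0 < t_cold < t_hot):
        raise ValueError("need 0 < t_cold < t_hot (kelvin)")
    return 1.0 - t_cold / t_hot


def max_work(heat_in: float, t_hot: float, t_cold: float) -> tuple[float, float]:
    """Split heat_in (joules) into the most work possible and the heat
    that must still be rejected to the cold reservoir (nature's tax)."""
    work = heat_in * carnot_efficiency(t_hot, t_cold)
    return work, heat_in - work


# Illustrative: a boiler at ~450 K exhausting to surroundings at ~300 K.
work, rejected = max_work(1000.0, t_hot=450.0, t_cold=300.0)
print(f"at most {work:.0f} J of work; at least {rejected:.0f} J rejected")
```

Even an ideal, frictionless engine pays this tax; real engines pay more.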

Clausius solved the riddle by observing a steam engine and calculating that energy spread out and left the system. In The Mechanical Theory of Heat, Clausius explains his findings:

… the quantities of heat which must be imparted to, or withdrawn from a changeable body are not the same, when these changes occur in a non-reversible manner, as they are when the same changes occur reversibly. In the second place, with each non-reversible change is associated an uncompensated transformation…

… I propose to call the magnitude S the entropy of the body… I have intentionally formed the word entropy so as to be as similar as possible to the word energy….

The second fundamental theorem [the second law of thermodynamics], in the form which I have given to it, asserts that all transformations occurring in nature may take place in a certain direction, which I have assumed as positive, by themselves, that is, without compensation… [T]he entire condition of the universe must always continue to change in that first direction, and the universe must consequently approach incessantly a limiting condition.

… For every body two magnitudes have thereby presented themselves—the transformation value of its thermal content [the amount of inputted energy that is converted to “work”], and its disgregation [separation or disintegration]; the sum of which constitutes its entropy.

Clausius summarized the concept of entropy in simple terms: “The energy of the universe is constant. The entropy of the universe tends to a maximum.”

“The increase of disorder or entropy is what distinguishes the past from the future, giving a direction to time.”

— Stephen Hawking, A Brief History of Time

Entropy and Time

Entropy is one of the few concepts in physics that gives time a direction. The “Arrow of Time” is a name given to the idea that time is asymmetrical and flows in only one direction: forward. That direction is set by the non-reversible process wherein entropy increases.

Astronomer Arthur Eddington pioneered the concept of the Arrow of Time in 1927, writing:

Let us draw an arrow arbitrarily. If as we follow the arrow[,] we find more and more of the random element in the state of the world, then the arrow is pointing towards the future; if the random element decreases[,] the arrow points towards the past. That is the only distinction known to physics.

In a segment of Wonders of the Universe, produced for BBC Two, physicist Brian Cox explains:

The Arrow of Time dictates that as each moment passes, things change, and once these changes have happened, they are never undone. Permanent change is a fundamental part of what it means to be human. We all age as the years pass by — people are born, they live, and they die. I suppose it’s part of the joy and tragedy of our lives, but out there in the universe, those grand and epic cycles appear eternal and unchanging. But that’s an illusion. See, in the life of the universe, just as in our lives, everything is irreversibly changing.

In his play Arcadia, Tom Stoppard uses a novel metaphor for the non-reversible nature of entropy:

When you stir your rice pudding, Septimus, the spoonful of jam spreads itself round making red trails like the picture of a meteor in my astronomical atlas. But if you stir backwards, the jam will not come together again. Indeed, the pudding does not notice and continues to turn pink just as before. Do you think this is odd?
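Stoppard’s stirred jam and Eddington’s arrow can both be seen in a toy simulation. The sketch below swaps in the Ehrenfest urn model, a standard teaching model that the article itself does not mention: N particles start on one side of a box, and at each step one particle picked at random hops to the other side. Entropy is measured Boltzmann-style as S = ln W (in units of Boltzmann’s constant), where W = C(N, k) counts the ways to have k particles on the left. The particle count, number of steps, and random seed are arbitrary assumptions.

```python
import math
import random


def ehrenfest(n_particles: int, steps: int, seed: int = 0) -> list[int]:
    """Ehrenfest urn model: all particles start on the left; each step,
    one particle picked uniformly at random switches sides.
    Returns the number of particles on the left after every step."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    left = n_particles
    history = [left]
    for _ in range(steps):
        # The chosen particle is on the left with probability left / n.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        history.append(left)
    return history


def entropy(n_particles: int, left: int) -> float:
    """Boltzmann entropy in units of k_B: ln of the number of ways to
    choose which particles are on the left."""
    return math.log(math.comb(n_particles, left))


N = 100
history = ehrenfest(N, steps=2000, seed=42)
for t in (0, 10, 50, 200, 2000):
    print(f"step {t:4d}: left={history[t]:3d}  S={entropy(N, history[t]):6.2f}")
```

Run it and the left-hand count drifts from 100 toward about 50 while S climbs toward its ceiling of ln C(100, 50). Nothing in the rules forbids a return to the starting state, but that state is one arrangement out of 2^100, so the simulation, like the jam, effectively never unmixes.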

(If you want to dig deeper on time, I recommend the excellent book by John Gribbin, The Time Illusion.)

“As a student of business administration, I know that there is a law of evolution for organizations as stringent and inevitable as anything in life. The longer one exists, the more it grinds out restrictions that slow its own functions. It reaches entropy in a state of total narcissism. Only the people sufficiently far out in the field get anything done, and every time they do they are breaking half a dozen rules in the process.”

Entropy in Business and Economics

Most businesses fail, by some estimates as many as 80% in the first 18 months alone. One way to understand this is with an analogy to entropy.

Entropy is fundamentally a probabilistic idea: For every possible “usefully ordered” state of molecules, there are many, many more possible “disordered” states. Just as energy tends towards a less useful, more disordered state, so do businesses and organizations in general. Rearranging the molecules — or business systems and people — into an “ordered” state requires an injection of outside energy.
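The counting argument can be made concrete with a toy example (an illustration of the physics, not a model of any real business): place N distinguishable particles in a box divided into two halves and count how many arrangements produce each split. The perfectly “ordered” split, with everything on one side, can happen in only one way, while as N grows, near-even splits account for almost all of the 2^N possible arrangements. The helper names below are hypothetical.

```python
import math


def arrangements(n: int, left: int) -> int:
    """Number of ways to put exactly `left` of n distinguishable
    particles in the left half of the box (a binomial coefficient)."""
    return math.comb(n, left)


def share_near_even(n: int, percent: int) -> float:
    """Fraction of all 2**n arrangements whose left-half count is within
    `percent` percent of n of an exact 50/50 split."""
    # |k - n/2| <= n * percent / 100, rewritten with integers only as
    # |200k - 100n| <= 2 * n * percent to avoid floating-point rounding.
    favourable = sum(
        arrangements(n, k)
        for k in range(n + 1)
        if abs(200 * k - 100 * n) <= 2 * n * percent
    )
    return favourable / 2**n


for n in (10, 100, 1000):
    ordered = arrangements(n, n)  # everything on the left: exactly one way
    print(f"N={n:5d}: all-left ways={ordered}, "
          f"share within 10% of even = {share_near_even(n, 10):.4f}")
```

Even at N = 10, the single “ordered” arrangement competes with 1,023 others; by N = 1000, virtually every arrangement sits within 10% of an even split. Real systems hold vastly more molecules than that, which is why disorder is the overwhelmingly likely outcome unless outside energy is spent to prevent it.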

Let’s imagine that we start a company by sticking 20 people in an office with an ill-defined but ambitious goal and no further leadership. We tell them we’ll pay them as long as they’re there, working. We come back two months later to find that five of them have quit, five are sleeping with each other, and the other ten have no idea how to solve the litany of problems that have arisen. The employees are certainly not much…

The post Battling Entropy: Making Order of the Chaos in Our Lives appeared first on FeedBox.